On 10 November 2020, I attended the second of two sessions about Docker by Bergler ICT. The sessions were quite short (1 hour each), so they were more of an introduction to Docker and Kubernetes. For me, that was ideal, since before then I only knew Docker by name and had never even heard of Kubernetes.

Bergler ICT is a small company that offers ICT services such as software development, application lifecycle management, DevOps, consultancy, and managed services. Because Bergler ICT is based in Breda, the Netherlands, the sessions were entirely in Dutch.

In this session, they explained what Kubernetes is, how it works, and why it is so useful.

Kubernetes

Before the hosts started talking about Kubernetes and its advantages, they gave a short recap of session 1, quickly going over what Docker is and how orchestration works.

The benefits of orchestrators like Kubernetes are their scalability, their self-healing superpowers, and zero-downtime deployments. Self-healing works by shutting down a corrupt container and starting a fresh one in its place, while zero downtime is achieved by starting a replacement container before the old one is shut down.
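To make these two mechanisms concrete, here is a toy model in Python. It is not Kubernetes code and uses no Kubernetes API; the `Container` class and the `self_heal` and `rolling_update` functions are hypothetical names made up for this illustration. Self-healing replaces every unhealthy container with a fresh one, and the rolling update starts each replacement before stopping the old container, so the number of running containers never drops below the original count.

```python
from dataclasses import dataclass, field
from itertools import count

_next_id = count(1)  # gives every new container a unique name


@dataclass
class Container:
    version: str
    healthy: bool = True
    name: str = field(default_factory=lambda: f"c{next(_next_id)}")


def self_heal(running: list[Container]) -> list[Container]:
    """Replace every unhealthy container with a fresh one of the same version."""
    return [c if c.healthy else Container(c.version) for c in running]


def rolling_update(running: list[Container], new_version: str) -> list[int]:
    """Swap containers one at a time: start the new one, then stop the old one.

    Mutates `running` in place and returns how many containers were running
    after each step, so we can check capacity never dropped below the start.
    """
    desired = len(running)
    history = []
    for old in list(running):                   # iterate over a snapshot
        running.append(Container(new_version))  # spin the replacement up first
        history.append(len(running))
        running.remove(old)                     # only then spin the old one down
        history.append(len(running))
    assert len(running) == desired
    return history


pods = self_heal([Container("v1"), Container("v1", healthy=False), Container("v1")])
history = rolling_update(pods, "v2")
print(min(history), sorted({c.version for c in pods}))  # 3 ['v2']
```

In a real cluster you don't write this loop yourself: you declare the desired state and Kubernetes keeps working towards it.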

They also shared a link to a Pluralsight course on Kubernetes’ core concepts.

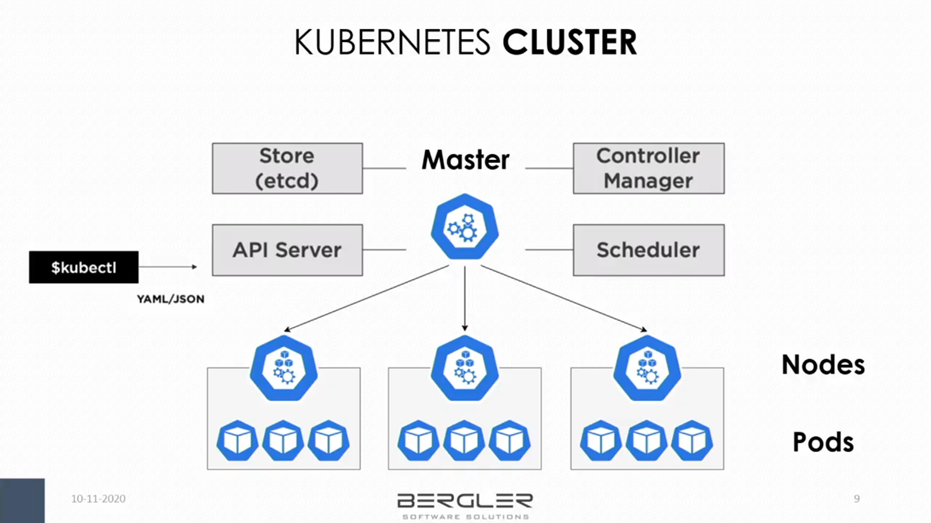

Then they explained the components of Kubernetes. The smallest entity is called a pod. A pod consists of one or more containers and runs on a node, the (physical or virtual) machine that hosts it. A node can thus be thought of as a host for pods.

Besides pods and nodes, there are also clusters. A cluster is the biggest component of Kubernetes: it consists of a master and multiple nodes, each of which can run multiple pods. Often you have a separate cluster for every environment you want to run your Docker containers in. A cluster can be configured manually via the kubectl CLI or automatically via a managed service.
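The hierarchy described above can be sketched as nested Python dataclasses. This is only a mental model: the class and field names are hypothetical and do not correspond to Kubernetes’ own API objects.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    containers: list[str]  # images running in this pod

    def __post_init__(self) -> None:
        # A pod always holds at least one container.
        if not self.containers:
            raise ValueError(f"pod {self.name!r} needs at least one container")


@dataclass
class Node:
    name: str
    pods: list[Pod] = field(default_factory=list)  # a node hosts pods


@dataclass
class Cluster:
    environment: str        # often one cluster per environment
    master: str = "master"  # coordinates the nodes
    nodes: list[Node] = field(default_factory=list)

    def containers(self) -> list[str]:
        """Flatten cluster -> nodes -> pods -> containers."""
        return [c for node in self.nodes for pod in node.pods for c in pod.containers]


dev = Cluster("development", nodes=[
    Node("node-1", [Pod("web", ["nginx", "app"])]),
    Node("node-2", [Pod("worker", ["app"])]),
])
print(dev.containers())  # ['nginx', 'app', 'app']
```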

Managed services

At some point, you’ll have to decide whether to configure Kubernetes yourself or to let a managed service take care of the configuration. In the end, it is a choice between freedom and comfort.

If you choose a managed service, you should be aware of possible vendor lock-in, although it is, of course, the faster solution. Manual configuration is much slower, but it gives you more freedom to tweak your settings.

The hosts then used a demo to show the challenges of databases and key vaults in Docker projects. A database in a container is not very useful, because its content is reset after every “reboot” of the container unless the data is stored on a persistent volume. Furthermore, storing secrets such as keys in the container is simply not secure.

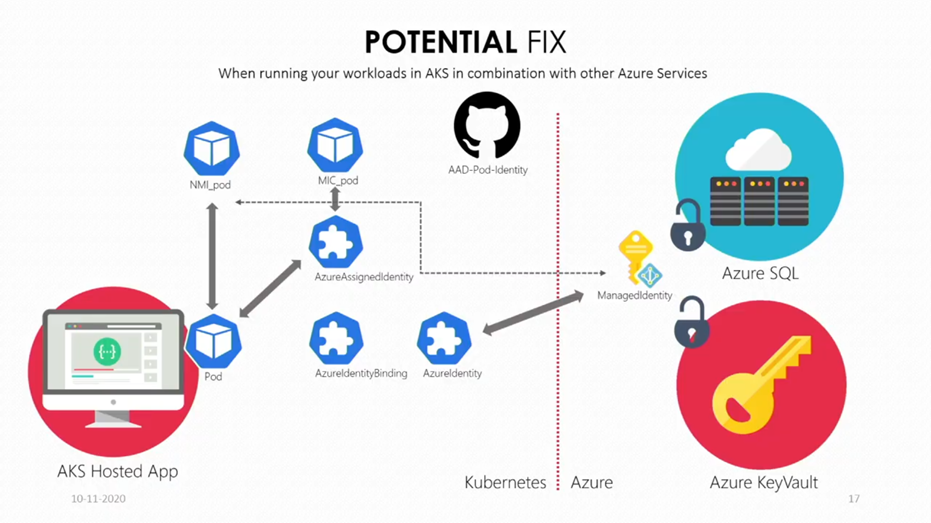

Both of these problems can be solved by putting both the database and the key vault in the cloud. In this session, they used Azure as a specific example.

In their example, they used Azure SQL and Azure Key Vault. If you already use an Azure database and an Azure key vault to store your data and your keys, it’s only a small step to Azure’s managed Kubernetes service, Azure Kubernetes Service (AKS).

However, Azure SQL and Azure Key Vault share one big problem: you need credentials to access both. Those passwords often end up in plaintext in the connection strings, so every developer can see them. If someone gets fired, you would have to change every password. That would be doable if you had only one container, but most of the time that’s not the case.
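The problem is easy to see once you treat a connection string as data. The Python sketch below splits a connection string into key/value pairs and reports keys that hold a password. The helper names are hypothetical, the server, database, user, and password values are made-up placeholders, and the parser is simplified: it does not handle quoted values that contain a semicolon. The second string assumes the `Authentication=Active Directory Managed Identity` keyword used by Microsoft.Data.SqlClient; other drivers use different keywords.

```python
SECRET_KEYS = {"password", "pwd"}  # keys that carry a plaintext secret


def parse_connection_string(conn: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' into a dict with lower-case keys (simplified)."""
    pairs = (part.split("=", 1) for part in conn.split(";") if "=" in part)
    return {key.strip().lower(): value.strip() for key, value in pairs}


def leaked_secrets(conn: str) -> list[str]:
    """Return the secret-looking keys present in the connection string."""
    return sorted(k for k in parse_connection_string(conn) if k in SECRET_KEYS)


# Placeholder values: anyone who can read this string can read the password.
plain = "Server=tcp:myserver.database.windows.net,1433;Database=mydb;User ID=app;Password=Sup3rSecret!"
# Assumed managed-identity variant: no password in the string at all.
msi = "Server=tcp:myserver.database.windows.net,1433;Database=mydb;Authentication=Active Directory Managed Identity"

print(leaked_secrets(plain))  # ['password']
print(leaked_secrets(msi))    # []
```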

A solution to this is to use managed identities that have specific rights. To use them in Kubernetes, you need AAD-Pod-Identity, an open-source project from Microsoft. Installing AAD-Pod-Identity deploys a DaemonSet that runs a Node Managed Identity (NMI) pod on every node of your cluster, as well as a Managed Identity Controller (MIC) pod. Both types of pods are needed to connect your own pods to managed identities in Azure. For that connection, you need an assigned identity and access tokens: the MIC pod creates an AzureAssignedIdentity, and the NMI pods provide the access tokens.

Because this solution is so complex and abstract, they clarified it with a short demo. First, they showed the problem with the plaintext passwords in the connection strings. Then they switched to another branch and walked us through the code changes. They pointed out that you need to define the AzureIdentity and the AzureIdentityBinding yourself. The binding refers to the identity by its name and is applied to pods through the aadpodidbinding label in their YAML file. They also warned us not to forget to configure Azure so that Kubernetes and the identity have access to the database.
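The matching between pods and identities can be modelled in a few lines of Python. The field names (`metadata.name`, `spec.azureIdentity`, `spec.selector`) and the `aadpodidbinding` label follow AAD-Pod-Identity’s AzureIdentity and AzureIdentityBinding resources, but here the resources are plain dicts and `identity_for_pod` is a hypothetical, simplified stand-in for the matching the MIC performs, not the project’s real code. All names and client IDs are placeholders.

```python
from typing import Optional

# Placeholder AzureIdentity resources (a real one needs more fields).
IDENTITIES = [
    {"kind": "AzureIdentity", "metadata": {"name": "orders-identity"},
     "spec": {"clientID": "<orders-client-id>"}},
    {"kind": "AzureIdentity", "metadata": {"name": "billing-identity"},
     "spec": {"clientID": "<billing-client-id>"}},
]

# spec.azureIdentity points at an AzureIdentity by name;
# spec.selector is the value a pod must carry in its aadpodidbinding label.
BINDINGS = [
    {"kind": "AzureIdentityBinding", "metadata": {"name": "orders-binding"},
     "spec": {"azureIdentity": "orders-identity", "selector": "orders"}},
    {"kind": "AzureIdentityBinding", "metadata": {"name": "billing-binding"},
     "spec": {"azureIdentity": "billing-identity", "selector": "billing"}},
]


def identity_for_pod(pod: dict, bindings: list, identities: list) -> Optional[str]:
    """Return the name of the identity a pod would get, or None.

    Simplification: only the first matching binding is used.
    """
    label = pod.get("metadata", {}).get("labels", {}).get("aadpodidbinding")
    if label is None:
        return None  # the pod did not opt in to a managed identity
    known = {i["metadata"]["name"] for i in identities}
    for binding in bindings:
        spec = binding["spec"]
        if spec["selector"] == label and spec["azureIdentity"] in known:
            return spec["azureIdentity"]
    return None


pods = [
    {"metadata": {"name": "orders-api", "labels": {"aadpodidbinding": "orders"}}},
    {"metadata": {"name": "billing-api", "labels": {"aadpodidbinding": "billing"}}},
    {"metadata": {"name": "debug-shell", "labels": {}}},
]
for pod in pods:
    print(pod["metadata"]["name"], "->", identity_for_pod(pod, BINDINGS, IDENTITIES))
```

Because each pod resolves to its own identity, giving pods different labels is also what keeps their access separate.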

They concluded the demo with a summary of the advantages of managed identities. The most obvious one is, of course, that there are no hard-coded credentials or secrets, but that’s not the only benefit: managed identities also make it much easier to enforce security policies.

The video with the demo can be found here. The demo itself starts at 28:01. (note: the explanation is in Dutch without subtitles)

Questions

Before ending the session, they answered some of our questions from the live chat.

Someone asked in what scenarios it is useful to implement Kubernetes on top of Docker. The hosts answered that it’s only useful in the enterprise world, when you have a complex application with a lot of containers. Once things get complex and need networking, you should consider Kubernetes.

The last interesting question was whether you can split the security per pod. They said that’s certainly possible: if you give certain pods different identities, the pods with different identities won’t be able to access each other’s data.

After that last question, they thanked us for listening and shared links to AAD-Pod-Identity and Robert te Kaat’s blog as extra learning resources.

Review

I learned a lot in these two sessions, considering they were only an hour each. Although I don’t think I could build a Docker app right now, I am glad that I now know more about how it works.

I am not a programmer, so I will probably never use it on my own initiative. But should I ever need Docker for work in security or AI, I will at least already understand some of its concepts, and I think that will prove useful someday.