I learned a lot about artificial intelligence in the past few weeks. The previous sessions covered getting started in AI, even without experience, and then dived a little deeper into computer vision and NLP.

In this session, advanced AI was on the agenda. At first, I thought this session would be difficult to follow because of the word “advanced”. But that was not the case. The word didn’t refer to the difficulty of the session, but to the sophistication of the projects the speakers had realized with AI.

Note: this is the last event in a series of four. Read my first post about the October Sessions to learn more about getting started in AI, or my second and third posts to learn more about computer vision and NLP, respectively.

29th October – Advanced AI

This was a very interesting and diverse session. The hosts, Alicia and Willem, and the guest speakers talked about how colleagues can now meet each other in their virtual workspace thanks to XR and how AI drones are used to save lives. At the end of the session, they also talked about AI responsibility and optimization.

Of course, we got several resources and tips as well, just like in the previous sessions.

Generating XR spaces from maps

The first guest speakers were Jay Natarajan and Fergus Kidd from Avanade. They explained to us how they are using AI to make smart spaces.

Jay started by explaining how they use AI to bring XR to life.

One in three business leaders make critical decisions without the information they need. And even when they do have that information, they first lean on personal experience, then on analytics, and only lastly on collective experience before making a decision.

One thing we learned from the pandemic is how important it is to interpret data across the whole value chain. When the first reports came out, no one was on the same page: none of the analyses matched, the data was inconsistent, and not all of it was available. This meant that once the data was digitized, it still needed to be cleaned. AI that analyzes the data could be a solution to this problem.

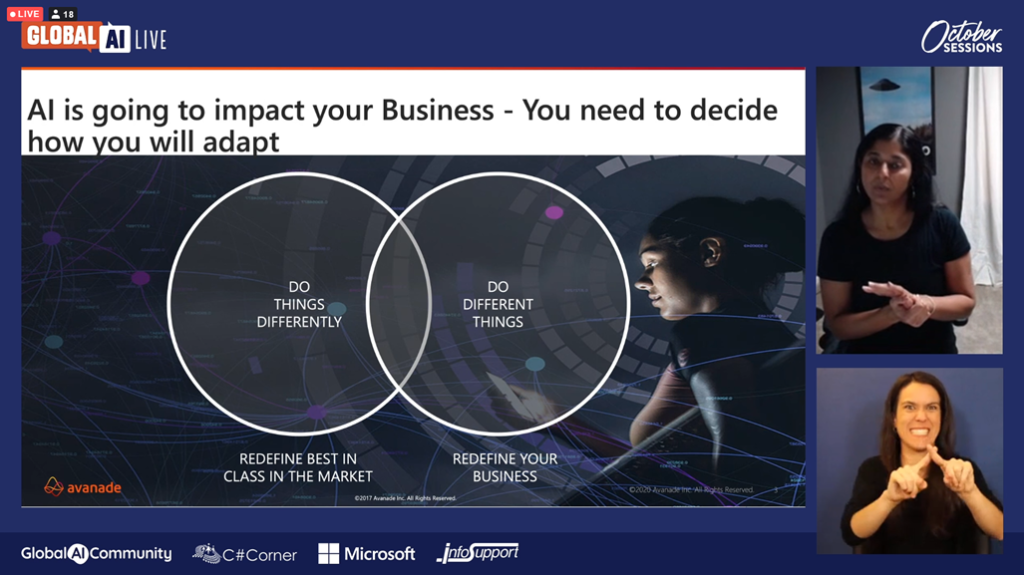

Jay ended by saying that AI is going to impact every business and that it is up to us to decide how we are going to adapt. We will have to redefine how AI is going to help us do things differently and how it is going to help us do different things as well.

After Jay’s intro, Fergus showed us what Avanade has done around smart spaces so far: which technologies they are using and what they are doing with them.

First, he explained what XR is. XR, or Extended Reality, is a combination of augmented reality (AR), virtual reality (VR), and mixed reality (MR). XR provides experiences without boundaries and is often used for safety in manufacturing and mining. It can also be used to learn skills in a realistic setting without dangerous or expensive equipment.

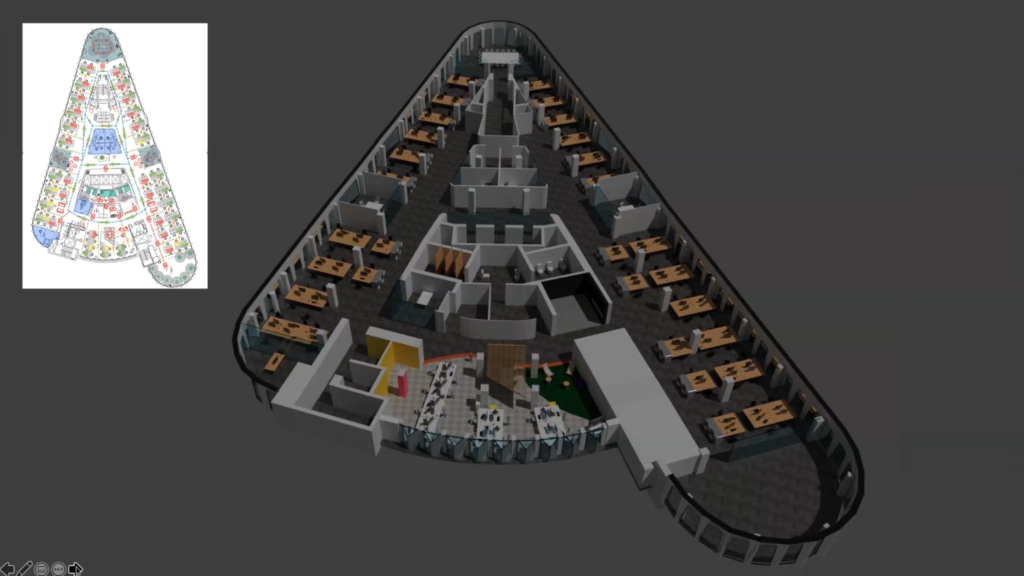

Then he wanted to show us how XR could connect distributed teams with their former workplaces, referring to our current situation. He illustrated this with his first demo, in which they used a digital twin to let colleagues interact with each other in their virtual work environment.

A digital twin is a virtual replica of a physical entity. In this case, it is a digital “smart” map of a physical space.

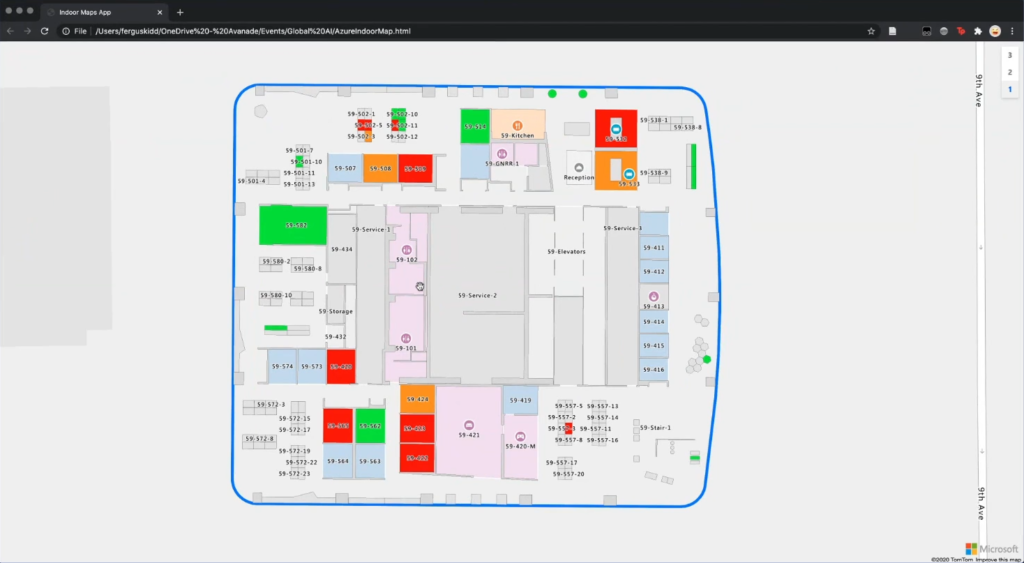

Avanade started with Azure Indoor Maps, which contained architectural diagrams (“smart” maps of real buildings or rooms). These diagrams contain all the relevant information about the physical space, such as the temperature, which chairs and tables are occupied, bookings, etc.

After that, they expanded the idea by making 3D maps so people can see what a building is going to look like, even before it has been built. That way, people can get familiar with a building before they have actually been there.

Later on, they wanted to make it possible for people to walk around in these virtual spaces. This idea came from the pandemic and the need to reconnect with our friends, family, and colleagues. They started experimenting with procedural generation so they could easily add objects like laptops to the virtual space, making it less empty and thus more realistic.

To end his presentation, he gave a short demo of Microsoft Flight Simulator, whose virtual world was built with blackshark.ai. They created very realistic 3D maps of the world, using AI to estimate the heights of the buildings.

As you can see, XR spaces can be used for literally anything we want. The options are limitless.

Panel discussion

In the panel discussion, Alicia and Willem introduced their next guest, Anthony Bartolo from Microsoft, who works a lot with HoloLens and with drones.

Anthony began by stressing that technology is increasingly a means of seizing an opportunity to solve a problem, rather than the cornerstone behind it. Companies think less about how they can use a certain new technology and more about how existing technologies can solve their current problems.

To underline his statement, Anthony used his drone project as an example (more about this later in this post). What was done to make it happen was driven by the opportunity, not by the technology. Some of the technology, like Custom Vision, didn’t even exist in its current form yet. The technology is adapting to our needs.

Willem then wanted to talk about virtual trips, as an extension of Fergus’ presentation. Anthony explained that we are not that far away from them, because even smell and touch can already be simulated in some way. Touch is still quite primitive, but how long will it take until that is realized too? It all depends on our imagination and our creativity.

Before moving on to Anthony’s presentation about using AI drones to save lives, Willem wanted to know which tips Anthony and Fergus had for the audience.

Fergus advised us to work with small iterations and to look at the main goal to decide what could be the best approach. He then recommended Unity for game development, Blender for 3D creation, and YouTube for tutorials in general.

Anthony echoed the idea of learning from YouTube videos, but he also recommended Microsoft Learn because there you actively learn things step by step.

Applying AI to save lives

Anthony Bartolo gave a very interesting presentation about his drone project at Microsoft, in partnership with Indro Robotics.

Indro Robotics already had drones that would inspect the coast for ships in distress, but those drones had to fly back every time before the footage could be reviewed. When a life is in danger, every second counts. So they wanted Microsoft to help them stream the footage live and, on top of that, detect interesting objects in the water.

During the project, Anthony stumbled on a lot of problems, like the weather conditions: life jackets look like different colors in different weather conditions, which makes them difficult to detect. Besides, Custom Vision wasn’t even commercially available yet. Luckily, he could use Microsoft’s service even though it was still in its testing phase.

Training the drones’ model took 5000 hours and was done with images extracted from Bing. One of Anthony’s colleagues, inspired by the drone project, later wrote a blog post about how you can do this more automatically.

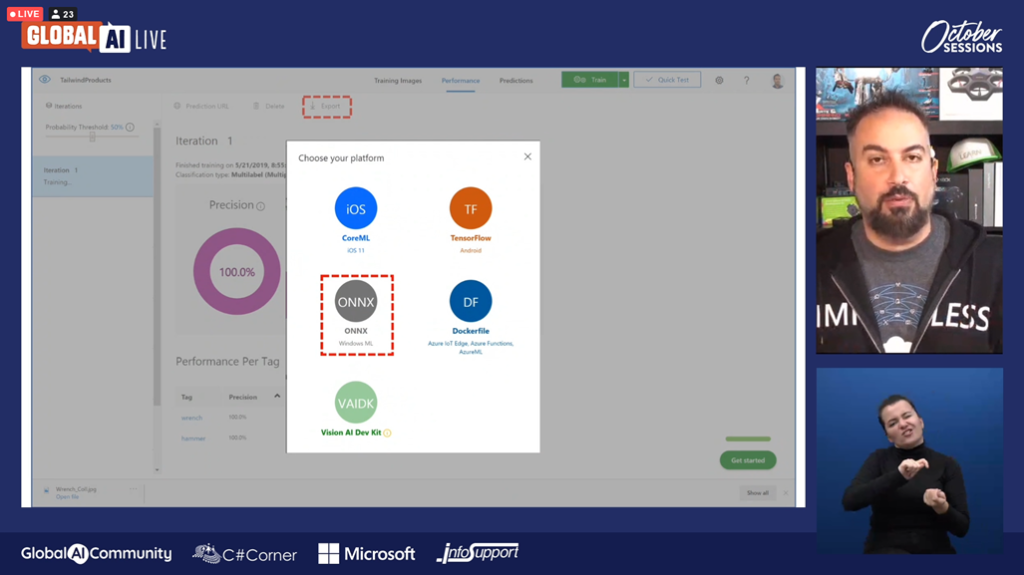

Anthony then showed us a demo of how he trained the drones to recognize life jackets in different environments. For this, he used the workbench at customvision.ai, just like Marion in the first session. For the sake of the demo, he trained on images of hammers and wrenches instead.

Anthony then went through the iterations of the training process of a model that had to learn to recognize hammers and wrenches. He explained that recall is the most important metric in a search and rescue scenario. Recall tells you which share of the actual objects (here, life jackets) the model finds, and a missed life jacket could cost a life. False positives, on the other hand, aren’t that bad, so precision (the share of detections that really are life jackets) matters less. Finding something that’s not a life jacket is still better than finding nothing.
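To make the difference between these two metrics concrete, here is a minimal Python sketch that computes precision and recall from raw detection counts. The two detectors and their counts are hypothetical, not results from the session.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) for a detector, computed from raw counts."""
    flagged = true_positives + false_positives  # everything the model called a life jacket
    actual = true_positives + false_negatives   # every real life jacket in the footage
    # Precision: of everything flagged, how much really was a life jacket?
    precision = true_positives / flagged if flagged else 0.0
    # Recall: of all real life jackets, how many did the model find?
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Hypothetical counts for two detectors scanning footage with 10 real life jackets.
cautious = precision_recall(true_positives=6, false_positives=0, false_negatives=4)
eager = precision_recall(true_positives=10, false_positives=5, false_negatives=0)

print(cautious)  # (1.0, 0.6): never wrong, but misses 4 people
print(eager)     # (0.6666666666666666, 1.0): 5 false alarms, but finds everyone
```

In a search and rescue setting, the eager detector is the one you would want: every false alarm costs a second look, while every miss could cost a life.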

Anthony ended his presentation by giving us some extra resources to learn from:

- His blog post about this drone project in which he explains every step

- His own GitHub where you can find the code of his drone project

- aka.ms/AIML10 to explore computer vision

Willem then asked Anthony one more question before they moved on to the next guest: how difficult would it be to recognize someone without a life jacket? Anthony explained that they use the life jacket as a marker, so it is the first trigger. Then they use an IR scan to detect the body heat of the person wearing it. Without the life jacket, the drone would need a much better understanding of what it is looking at, and they aren’t there yet.

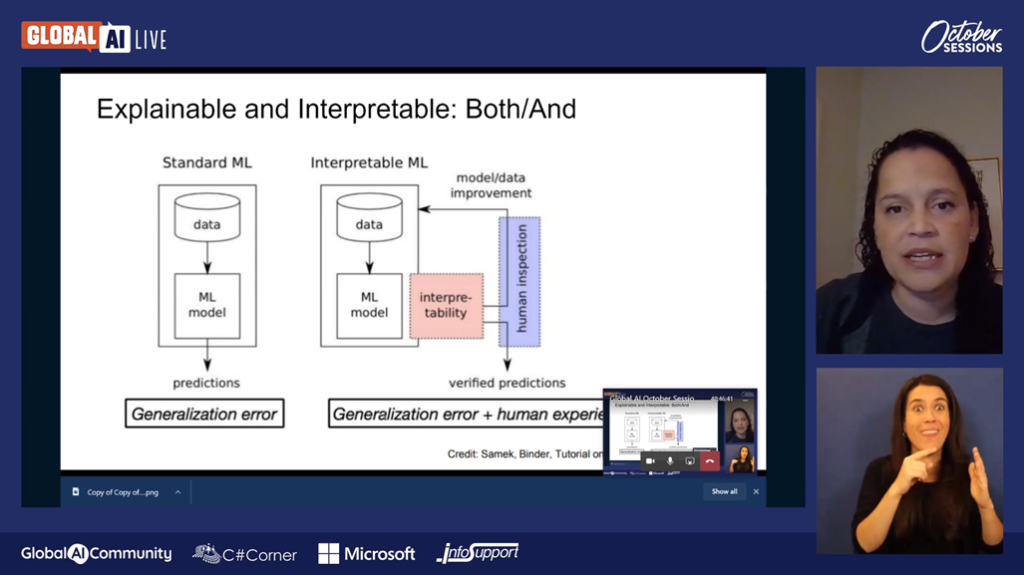

Explainable and Interpretable AI

As explained in the previous sessions, ethics is becoming more and more important in AI. That is how the term explainable AI came about. Noelle Silver showed us how to make explainable models.

Noelle’s presentation was based on Microsoft’s six principles of responsible AI: fairness, reliability & safety, privacy & security, inclusiveness, transparency, and accountability.

She also explained that explainable AI and interpretable AI are both terms that describe our interest in making models understandable and unbiased. One way to get there is to ask yourself the right questions when creating a model. If you ask the right questions and use the right tools, you don’t have to worry about hidden bias, because you will uncover it along the way.

Noelle also talked about the three waves of AI: symbolic AI, statistical AI, and explainable AI. She explained how AI went from a set of rules to using statistics to determine confidence, and how we now want to add constructs that provide explanations. Explainable AI and ML are going to be essential for future customers to understand and trust AI.

She then explained three approaches to implementing explainability:

- post-hoc / explain a given model: be able to explain your model after you or someone else made it

- ablations: drop a feature, look at how the prediction changes, and explain how the model reacts

- modeling feature absence: compare the prediction with what happens when a feature is treated as missing, for example by replacing it with values taken from a sample of the data
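The difference between the last two approaches can be sketched in a few lines of Python. The scoring model, its feature names, and the data below are hypothetical; real explainability tools handle far more complex models. Ablation swaps one feature for a fixed baseline (here: zero), while modeling feature absence averages the prediction over the values that feature takes in a background sample.

```python
# Hypothetical toy model: a hand-written score over three named features.
def model(features):
    return 0.5 * features["income"] + 2.0 * features["years_employed"] - 0.25 * features["age"]


def ablation_effects(predict, sample, baseline):
    """Ablation: swap one feature at a time for a fixed baseline value and
    report how much the prediction drops (positive = the feature raised the score)."""
    original = predict(sample)
    return {name: original - predict({**sample, name: baseline[name]}) for name in sample}


def absence_effects(predict, sample, background):
    """Feature absence: swap one feature at a time for each value it takes in a
    background sample, average those predictions, and compare with the original."""
    original = predict(sample)
    effects = {}
    for name in sample:
        predictions = [predict({**sample, name: row[name]}) for row in background]
        effects[name] = original - sum(predictions) / len(predictions)
    return effects


sample = {"income": 40, "years_employed": 5, "age": 30}
baseline = {"income": 0, "years_employed": 0, "age": 0}
background = [
    {"income": 30, "years_employed": 2, "age": 40},
    {"income": 50, "years_employed": 4, "age": 20},
]

print(ablation_effects(model, sample, baseline))
# {'income': 20.0, 'years_employed': 10.0, 'age': -7.5}
print(absence_effects(model, sample, background))
# {'income': 0.0, 'years_employed': 4.0, 'age': 0.0}
```

Both results answer a different question: compared with a zero baseline, income looks like the biggest driver, but compared with a typical person from the background sample it makes no difference at all. Deciding what “absent” means is part of explaining the model.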

She advised us to start every model from scratch, to really understand it before automating the process with packages and toolsets. That way, you should be able to explain why your model fails at some tasks and how to train it not to make these mistakes again.

To end her presentation, Noelle also gave us some more resources:

- blogs:
  - Eva Pardi’s blog about responsible AI with text interpretation
  - Willem Meints’ blog post about Fairlearn, one of Microsoft’s new tools around Responsible AI (demo can be found here)
  - Sammy Deprez’s blog post about responsible Azure ML
  - her blog where she talks about Applied AI and AI for business leaders

- tools:
  - interpret – explain blackbox ML
  - interpret-text – explain text-based ML models and visualize the results

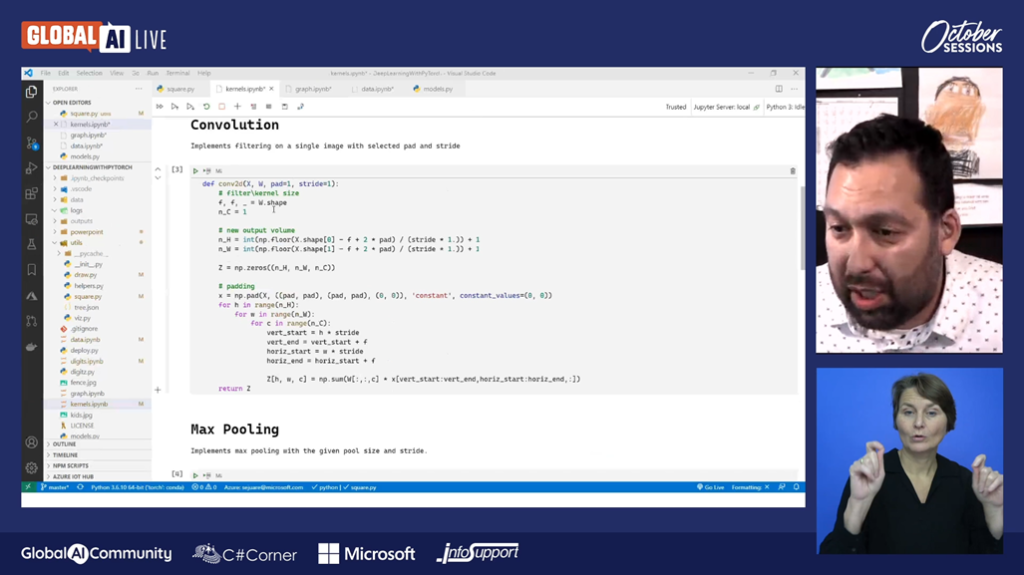

Model optimization with PyTorch

The next guest was Seth Juarez, who had been one of the hosts of the first session; it was nice to see him come back as a guest. He talked about how deep learning models work.

He started by showing us how to build a cognitive service and explained to us how every part worked.

The model he showed was supposed to tell, based on a picture, whether it was looking at a burrito or a taco. But when he gave the model a picture of a pizza, the model was 99% sure it was a burrito.

He then explained why this model made that mistake with such high confidence.

First, he showed us how to create a model for a simplified problem: given a picture of only 9 pixels (3×3), decide whether it is darker at the top or at the bottom. For that, the value of every pixel is multiplied by a weight of 1 or -1 and the results are added together. Then a bias is added to decide whether small differences count as top or as bottom.
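The 9-pixel example translates almost directly into code. This Python sketch assumes darkness values between 0.0 (light) and 1.0 (dark), stored row by row, and gives the middle row a weight of 0; both choices are made for illustration and are not taken from Seth’s demo.

```python
# Weights for a 3x3 picture, flattened row by row:
# +1 for the top row, 0 for the middle row (an assumption), -1 for the bottom row.
TOP_MINUS_BOTTOM = [1, 1, 1,
                    0, 0, 0,
                    -1, -1, -1]


def darker_at(pixels, bias=0.0):
    """Classify a 3x3 picture (0.0 = light, 1.0 = dark) as darker at the top or bottom."""
    # Weighted sum: positive when the top row is darker than the bottom row.
    score = sum(p * w for p, w in zip(pixels, TOP_MINUS_BOTTOM)) + bias
    return "top" if score > 0 else "bottom"


dark_top = [0.9, 0.8, 0.9,
            0.5, 0.5, 0.5,
            0.1, 0.2, 0.1]
slightly_dark_top = [0.6, 0.6, 0.6,
                     0.5, 0.5, 0.5,
                     0.5, 0.5, 0.5]

print(darker_at(dark_top))                      # top
print(darker_at(slightly_dark_top))             # top
print(darker_at(slightly_dark_top, bias=-0.5))  # bottom: the bias demands a bigger difference
```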

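The method for top, middle, or bottom is quite similar, except that there is one set of weights per answer, so the weights form a matrix. These matrices can be stacked to make a neural network, but stacking won’t make a difference without adding non-linearity: two linear steps in a row collapse into a single linear step.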
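Why stacking alone doesn’t help can be checked with a little arithmetic. In this Python sketch (small made-up matrices, biases left out for brevity), applying two weight matrices one after the other gives exactly the same result as applying their product, which is a single matrix. Putting a ReLU, a common non-linearity, between them breaks that equivalence.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def matmul(left, right):
    """Multiply two matrices given as lists of rows."""
    columns = list(zip(*right))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in left]


def relu(vector):
    """Non-linearity: keep positive values, replace negative ones with 0."""
    return [max(0, x) for x in vector]


W1 = [[1, -1],
      [2, 0]]   # first layer: 2 inputs -> 2 hidden values
W2 = [[1, 1]]   # second layer: 2 hidden values -> 1 output
x = [1, 3]

stacked = matvec(W2, matvec(W1, x))          # two linear layers in a row
collapsed = matvec(matmul(W2, W1), x)        # one layer with the product matrix
with_relu = matvec(W2, relu(matvec(W1, x)))  # non-linearity in between

print(stacked, collapsed, with_relu)  # [0] [0] [2]
```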

Then Seth showed us a convolutional neural network that could find the edges of the objects in a picture by looking at how the darkness of the pixels changes.
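The edge-detection idea can be sketched with a single hand-picked filter. This Python sketch slides a 2×2 kernel over a tiny picture (valid positions only, no padding), which is the cross-correlation that convolution layers in libraries such as PyTorch compute. In a real CNN, the kernel values are learned during training instead of chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Darkness values: left half light (0), right half dark (1), so there is a vertical edge.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Responds where the picture gets darker from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

for row in convolve2d(image, vertical_edge):
    print(row)
# [0, 2, 0]
# [0, 2, 0]
```

The output is only non-zero in the middle column, exactly where the light and dark halves meet.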

To optimize this model, you need a loss function like Mean Squared Error (MSE), which measures how far the predictions are from the expected answers. By minimizing this function, you find the best weights and biases. This is where PyTorch comes into the picture: it keeps track of all the variables, computes how the loss changes with each of them, and adjusts them step by step towards the optimal state. This is the state in which the loss function returns a value as close to zero as possible.
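Here is a minimal, dependency-free sketch of what that optimization does for the simplest possible model: one weight and one bias. The gradients are worked out by hand here; in PyTorch, calling `loss.backward()` computes them automatically and an optimizer such as `torch.optim.SGD` applies the update step. The data points are hypothetical, generated from y = 2x + 1, so the expected result is known in advance.

```python
def mse(predictions, targets):
    """Mean Squared Error: the average of the squared differences."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def train(xs, ys, learning_rate=0.05, steps=1000):
    """Fit y = w * x + b by gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Take a small step downhill.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 1 for x in xs]  # hypothetical data from y = 2x + 1

w, b = train(xs, ys)
print(round(w, 4), round(b, 4))                  # 2.0 1.0
print(mse([w * x + b for x in xs], ys) < 1e-10)  # True
```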

But now back to the burritos and the tacos.

The model is so certain that the pizza is a burrito because it has only been given two choices. Training the model consists of numerous iterations of optimization. When it makes a prediction, the model pushes the values of every pixel of the picture through its functions until only two numbers are left, one per class. The model is not self-aware: it doesn’t think about what it is doing and will simply choose the highest number.
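The “99% sure” part comes from how those two final numbers are turned into probabilities. Most classifiers use the softmax function for this, which forces the probabilities to add up to 100%; the sketch below assumes the burrito/taco model does the same, and the raw scores are made up. Because only the difference between the two numbers matters, a pizza that barely resembles either class can still come out as a confident burrito.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that add up to 1."""
    highest = max(logits)  # subtracting the max avoids overflow; the result is unchanged
    exps = [math.exp(z - highest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["burrito", "taco"]

# Hypothetical raw scores for a pizza photo.
confident_scores = [3.0, -1.5]  # fairly high score for burrito
weak_scores = [-5.0, -9.5]      # both scores low, but the same gap of 4.5

for scores in (confident_scores, weak_scores):
    probs = softmax(scores)
    best = max(range(len(classes)), key=lambda i: probs[i])
    print(classes[best], round(probs[best], 2))
# burrito 0.99
# burrito 0.99
```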

Seth concluded by saying that when the answer isn’t correct, you can’t simply say the model predicted wrongly. If the answer isn’t what you expected, you need to ask yourself whether the model was actually trained on the correct answer (and is thus able to answer correctly) and whether you used the correct data to train it.

Lastly, Seth shared his GitHub repo, where you can find the code of his demo with all of the explanations (fair warning: the explanation is quite mathematical!).

Tips to make your AI responsible

In the last panel discussion of this session, and of the series as well, Willem, Alicia, Seth, and Noelle talked a bit further about explainable and responsible AI.

They all gave us some additional tips to make responsible models:

- Make sure that your model is unbiased and responsible in every way. Make it a habit: you never know if you are building the next Facebook or something similar.

- Make your model serve your customers. Make sure you know who you are serving and whether your predictions are serving them.

- Implement tensors and interpreters in different stages. Implement them both in your data collection phase and in your training phase. That way, you can be as certain as possible that your model is responsible.

- Use unit tests, just like in software development. Use them to check how unbiased the model’s answers are and whether it is able to answer “none” when it is asked something like “Is this a burrito or a taco?” while it is actually looking at a pizza.

- Don’t stop training and adapting your model once it is in production. AI is the definition of a perpetual beta, so update your models on the fly.

- Store your inference/prediction data as well. You can then use unsupervised ML to detect whether there is a big difference between the training data and the inference data.

- Test your model’s resistance to bias. For example, invent a person who is exactly the kind of person you don’t want to discriminate against, use them during your testing, and check how your model reacts.
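A unit test like the burrito/taco/pizza one could look as follows with Python’s built-in `unittest` framework. The `classify` wrapper, its two thresholds, and the raw scores are all hypothetical: the wrapper answers “none” unless the winning class is both clearly ahead and strongly activated. Thresholding the raw score is just one simple heuristic for spotting inputs the model was never trained on, not a complete solution.

```python
import math
import unittest

LABELS = ["burrito", "taco"]


def softmax(logits):
    """Turn raw scores into probabilities that add up to 1."""
    highest = max(logits)
    exps = [math.exp(z - highest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, min_score=2.0, min_probability=0.9):
    """Hypothetical wrapper around a two-class model that may also answer 'none'."""
    probs = softmax(logits)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    # Refuse to answer if the winner is weakly activated or not clearly ahead.
    if logits[best] < min_score or probs[best] < min_probability:
        return "none"
    return LABELS[best]


class ClassifierTests(unittest.TestCase):
    def test_recognizes_a_burrito(self):
        # Hypothetical raw scores for a real burrito photo.
        self.assertEqual(classify([6.0, 1.0]), "burrito")

    def test_answers_none_for_a_pizza(self):
        # Hypothetical scores for a pizza: softmax alone would say ~99% burrito.
        self.assertEqual(classify([1.5, -3.0]), "none")


if __name__ == "__main__":
    unittest.main(argv=["classifier-tests"], exit=False)
```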

What an experience!

Now that I have attended every session, I’m so ready to do something with AI! I loved how inspiring the talks were and how accessible they were to total beginners.

I learned a lot and gained a lot of insight. I’m definitely going to look at all the resources they gave us, so maybe I can try to make some simple models myself.

So who knows? Maybe you can expect some updates about my further experience with AI 😉

To be continued!