Last week we learned how to get started in AI, what steps to take, and what resources are available for beginners. Some guests also talked a bit about cognitive services and computer vision.

In this session we dived a little deeper into AI, talking about computer vision, its technical challenges, and its models.

Note: This is the second event in a series of four. Please read my first post about the October Sessions to learn more about getting started in AI.

15th October – Computer vision

After last week’s introduction to AI, the Global AI community dived a little deeper into computer vision. Guests presented some big real-time projects that use it, such as the OpenCV AI Kit (OAK) and the HoloLens.

This session was much more specific and therefore even more interesting. I learned A LOT and got even more resources to learn from than last week, so of course I will share those with you as well.

I wasn’t the only one

The last session was very educational, so I was even more excited about this one, because now they would get more specific. I was ready so early that I was the first one waiting for the live stream to begin!

The first one there!

But I wasn’t the only one who couldn’t wait until 7:00 pm. Even the hosts of this session, Willem from last week and Alicia Moniz, couldn’t wait, so they started 10 minutes early to talk about how their lives were going during the pandemic.

One of the things Willem and Alicia talked about was how much both AI and the Global AI Community itself have grown. The concept of artificial intelligence dates back to the 1950s, but it has grown rapidly in recent years. AI is now so important that we have to start thinking about responsible AI, to protect privacy and to stay ethical. The Global AI Community plays a big role in that because it educates people from around the world, and it reaches a lot of people: last time, over 10,000 people were watching.

OpenCV AI Kit

Satya Mallick was the first guest of this week’s session. He talked about how he became the CEO of OpenCV, how the company has grown, and what they are achieving right now.

OpenCV offers an open-source library of programming functions aimed mainly at real-time computer vision. What started as a library has grown to include an AI kit offering different algorithms.

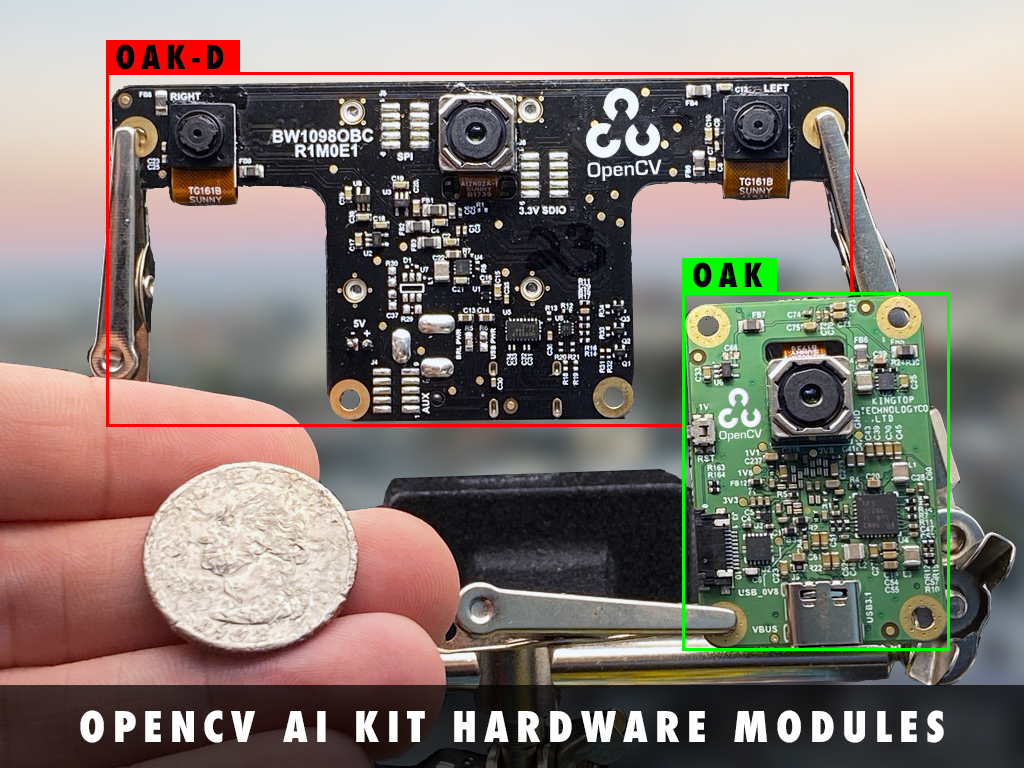

Right now, they are focusing on OAK (short for “OpenCV AI Kit”). OAK has two versions: OAK-1, a tiny 4K camera with an AI processor, and OAK-D, a camera that combines depth perception with neural inference. Both belong to a family of edge AI solutions, which means that the AI algorithms run locally on the hardware device itself. That way the images never leave the camera; only their metadata does. Because the heavy lifting happens on the camera, a small host such as a Raspberry Pi (RPI) is enough, and local processing ensures better speed and connectivity, more privacy, and a lower cost.
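To get a feel for why sending only metadata helps with speed and connectivity, here is a rough back-of-the-envelope sketch in JavaScript. It assumes an uncompressed 4K frame (3840 × 2160 pixels, 3 bytes per pixel for 8-bit RGB) at an assumed 30 frames per second, plus a hypothetical detection result. Real cameras usually compress video, so the actual gap is smaller, and the metadata format below is made up for illustration; it is not OAK’s actual output format.

```javascript
// Back-of-the-envelope comparison: raw 4K frame vs. a small metadata message.
// Assumption: uncompressed 8-bit RGB (3 bytes per pixel); real pipelines compress video.
const WIDTH = 3840;
const HEIGHT = 2160;
const BYTES_PER_PIXEL = 3;
const FPS = 30; // assumed frame rate

const rawFrameBytes = WIDTH * HEIGHT * BYTES_PER_PIXEL; // 24,883,200 bytes per frame
const rawBytesPerSecond = rawFrameBytes * FPS;

// Hypothetical metadata for one detection: a label, a confidence score and a bounding box.
// This format is invented for illustration and is not the OAK/DepthAI output format.
const metadata = { label: "person", confidence: 0.92, box: [1200, 340, 1480, 900] };
const metadataBytes = new TextEncoder().encode(JSON.stringify(metadata)).length;

console.log(`raw frame: ${rawFrameBytes} bytes, raw stream: ${rawBytesPerSecond} bytes/s`);
console.log(`metadata: ${metadataBytes} bytes per detection`);
console.log(`metadata is about ${Math.round(rawFrameBytes / metadataBytes)} times smaller than one raw frame`);
```

Even with compression, a few dozen bytes per detection is far less to send over a network than a video stream, which is one reason on-device processing helps with speed, connectivity and privacy.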

The OAK-1 is a tiny-but-mighty 4K camera capable of running the same advanced neural networks as OAK-D, but in an even smaller form factor for projects where space and power are at a premium. According to the talk, it’s 8 times faster than previous solutions and smaller than an AA battery. The OAK-1 has its AI processor on board and connects to a host computer over USB.

The OAK-D is a spatial AI powerhouse, capable of simultaneously running advanced neural networks while providing depth from two stereo cameras and color information from a single 4K camera in the center. It can detect and locate objects and people. The OAK-D has to be connected to a host that supports OpenVINO, such as an RPI.

You can pre-order OAK-1 and OAK-D right now on this site at a discounted price. They also provide free courses for both.

OAK has many different use cases: think of smart glasses for visually impaired people, sports monitoring, or gun detection in American schools.

Virtual eye vision with HoloLens

Stefano Tempesta’s presentation about the HoloLens dovetailed very well with OAK’s use case.

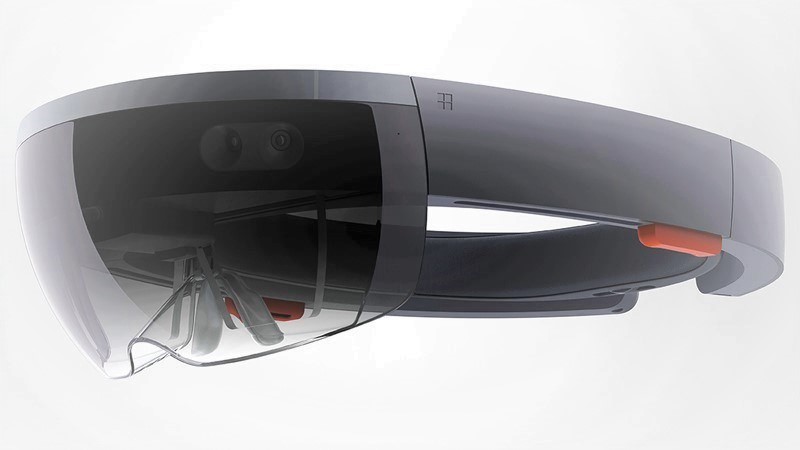

The HoloLens is an untethered mixed reality device developed by Microsoft, with apps and solutions that enhance collaboration. The device uses not only augmented reality (AR) but also speech recognition, so you can control it with voice commands.

The whole thing weighs about 560 grams. It has a 3D camera, sensors, a built-in computer, and batteries that last for 2-3 hours of active use, and it uses Cortana for speech recognition. It responds to both hand gestures and voice commands.


Stefano started by showing us an inspiring video about Professor Markus Meister and the HoloLens. Professor Meister and his laboratory combined augmented reality technology with computer vision algorithms to create glasses that can help blind people navigate unfamiliar spaces.

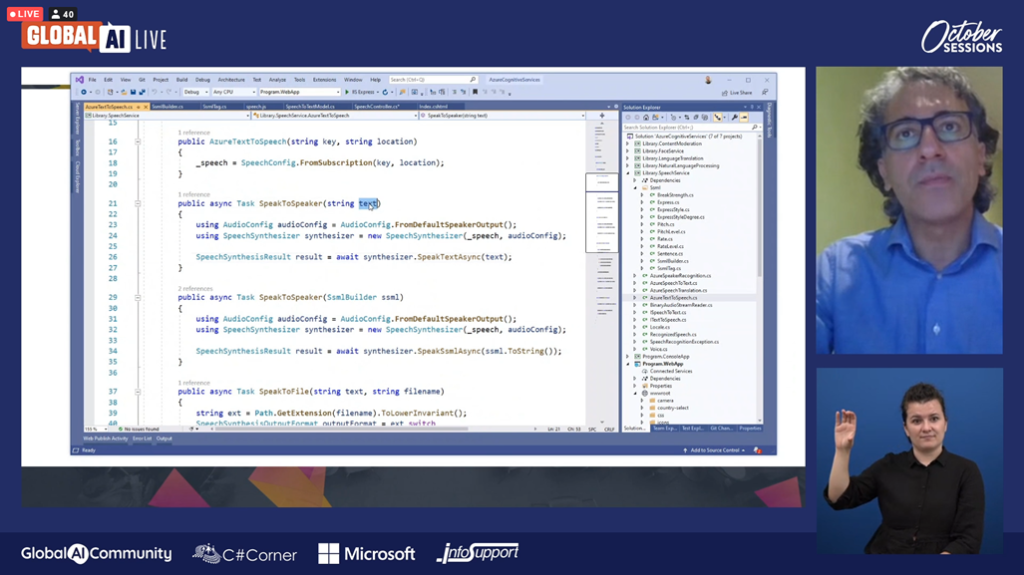

After this short video, Stefano showed us some code to explain how the solution was built in Azure and which technologies made it possible.

Another inspiring story was that of Saqib Shaikh, a blind developer at Microsoft. He combined a smart camera with speech recognition to create glasses that can tell blind people what’s happening around them. They just have to swipe the glasses, and the app connected to the glasses describes their surroundings.

A very important question came up when hearing about all those smart glasses: what do you do about misinformation or bias? Stefano told us it is indeed very difficult to remove all bias completely, but if you train the model enough you should get more accurate results. You should also make sure your training set isn’t biased to begin with. For example, the HoloLens was initially trained on middle-aged women, but that was a deliberate market choice.
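Stefano’s advice to check the training set itself can start with something as simple as counting how well each group is represented. Below is a minimal JavaScript sketch of that first sanity check. The `ageGroup` attribute, the example counts and the 15% threshold are all hypothetical, and under-representation is only one of many kinds of bias, so this is no substitute for a proper bias review.

```javascript
// Share of the training set that falls into each group of one attribute.
function groupShares(examples, attribute) {
  const counts = new Map();
  for (const example of examples) {
    const group = example[attribute];
    counts.set(group, (counts.get(group) || 0) + 1);
  }
  const shares = new Map();
  for (const [group, count] of counts) {
    shares.set(group, count / examples.length);
  }
  return shares;
}

// Groups whose share is below a chosen minimum (the threshold is a judgment call).
function underrepresentedGroups(shares, minShare) {
  return [...shares]
    .filter(([, share]) => share < minShare)
    .map(([group]) => group);
}

// Hypothetical training set: 10 examples tagged with an age group.
const trainingSet = [
  ...Array(6).fill({ ageGroup: "35-54" }),
  ...Array(3).fill({ ageGroup: "18-34" }),
  ...Array(1).fill({ ageGroup: "55+" }),
];

const shares = groupShares(trainingSet, "ageGroup");
console.log(shares);
console.log(underrepresentedGroups(shares, 0.15)); // hypothetical 15% threshold
```

A group flagged here is not automatically a problem (as the HoloLens example shows, a skewed set can be a deliberate choice), but it tells you where to look more closely.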

After his presentation Stefano answered some questions in the chat and gave us some extra resources to learn from:

- best practices and policies to remove biases from algorithms

- how to program HoloLens

- Microsoft’s position on developing AI responsibly

- Microsoft’s cognitive services (anomaly detector, NLP, computer vision, etc.)

- Stefano’s article about confidential computing (for example in the medical field)

AI in JavaScript – say what??

One of the most surprising things we learned that day was that you can also implement AI in JavaScript. Even the hosts were surprised!

Milecia McGregor from Oklahoma explained how the brain.js library made it possible for her to build a VR app in the browser, without back-end knowledge and without having to worry about data cleaning. She also showed us her code to demonstrate how easy AI is in JavaScript: under the hood, it’s just a bunch of large arrays of numbers. Even creating a whole neural network takes just one line of code!

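Here is a short example of what her code looked like:

```javascript
const brain = require('brain.js');

// making a neural network (the options are passed as a single object)
const net = new brain.NeuralNetwork({ hiddenLayers: [4], learningRate: 0.6 });

// training the neural network
// (illustrative training data, not from the talk: the XOR function)
const trainingData = [
  { input: [0, 0], output: [0] },
  { input: [0, 1], output: [1] },
  { input: [1, 0], output: [1] },
  { input: [1, 1], output: [0] },
];
net.train(trainingData);

// giving new data to the neural network
const output = net.run([1, 0]);
```

A short tutorial of brain.js can be found here on GitHub.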
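Milecia’s point that a neural network is “just a bunch of large arrays of numbers” can be made concrete without any library. The JavaScript sketch below is an illustration, not brain.js’s internal code: it runs a two-input network with one hidden layer of two neurons, using hand-picked, hypothetical weights. Each layer computes a weighted sum plus a bias for every neuron and squashes it with a sigmoid.

```javascript
// A library-free sketch of a tiny neural network's forward pass.
// The network is nothing more than arrays of numbers (weights and biases).

// Sigmoid squashes any number into the range (0, 1).
function sigmoid(x) {
  return 1 / (1 + Math.exp(-x));
}

// One fully connected layer: each neuron takes a weighted sum of all inputs,
// adds its bias and applies the sigmoid activation.
function layerForward(inputs, weights, biases) {
  return weights.map((neuronWeights, i) => {
    let sum = biases[i];
    for (let j = 0; j < inputs.length; j++) {
      sum += neuronWeights[j] * inputs[j];
    }
    return sigmoid(sum);
  });
}

// Hypothetical hand-picked numbers; a library like brain.js would learn these during training.
const hiddenWeights = [
  [0.5, -0.5],
  [-0.25, 0.75],
];
const hiddenBiases = [0, 0];
const outputWeights = [[1, -1]];
const outputBiases = [0];

const input = [1, 0];
const hidden = layerForward(input, hiddenWeights, hiddenBiases);
const output = layerForward(hidden, outputWeights, outputBiases);
console.log(hidden, output);
```

In this picture, `hiddenLayers: [4]` simply means the hidden layer has four rows of weights instead of two. Training, which brain.js handles in `net.train`, is the part that finds good values for these arrays.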

Interaction with AI

We already have a few AI programs, like chatbots, that do human things, but it’s quite difficult to make them human-like. Human behavior is very hard to capture in code because people are so unpredictable.

People in general aren’t complicated, but their relationships with others are. Our decisions aren’t based solely on facts, and from a young age we learn to keep a poker face. At the same time, non-verbal communication is becoming more and more important: think about how much we care about the first impression we make on others.

Elizabeth Bieniek told us that where and how we work, and whom we work with, will change over time as technology becomes more humanized.

Panel discussion

At the end of all the presentations, Willem, Alicia, and Elizabeth answered a mix of questions from the audience.

Someone asked for their opinion on our global screen addiction: will AI make us less addicted? Elizabeth quickly answered: “No, we will just redefine our definitions of screens. Right now, those screens are our phones and our laptops. Later ‘screens’ will also mean smart glasses, etc.”

Another very interesting question was whether they thought we would ever use haptic sensors, combined with computer vision, for meetings or other purposes.

Elizabeth said that, in her opinion, now would be a good time because of Covid-19, but haptic technology isn’t advanced enough yet. Right now, we focus more on audio and video and less on touch. But she is sure that in the future we will add more senses to our meetings. To support her view, she asked us: “Think of yourself in the physical world, without being able to touch; how do you function? When it’s so important in your physical interactions, why wouldn’t it be important in your virtual interactions?”

Haptic technology, also known as kinaesthetic communication or 3D touch, refers to any technology that can create an experience of touch by applying forces, vibrations, or motions to the user.

Wikipedia

Willem, on the other hand, thinks it won’t go that far, not even with smart glasses in general, because now and then we all need a break from technology, developers included: all these technologies can be very overwhelming.

A lot to think about

The world is constantly changing and technology is improving every day. More and more, we have to think not only about what we make but also about how we make it. The ethical aspect is becoming more important than the technology itself.

This week was, again, very educational and left me with a lot to think about. But I’m very happy with all the resources we got, so I have an idea of how to start.

To be continued!